100 Useful Command-Line Utilities

A Guide to 100 (ish) Useful Commands on Unix-like systems, by Oliver; 2014

Introduction

In An Introduction to the Command-Line (on Unix-like systems) [1], which I'll borrow heavily from in this article, we covered my subjective list of the 20 most important command line utilities. In case you forgot, it was:

- pwd

- ls

- cd

- mkdir

- echo

- cat

- cp

- mv

- rm

- man

- head

- tail

- less

- more

- sort

- grep

- which

- chmod

- history

- clear

A few of the commands, mostly clustered at the end of the article, are not standard shell commands and you have to install them yourself (see apt-get, brew, yum). When this is the case, I make a note of it.

[1] Note: Unix-like systems include Linux and Macintosh.

pwd

To see where we are, we can print working directory, which returns the path of the directory in which we currently reside:

$ pwd

Sometimes in a script we change directories, but we want to save the directory in which we started. We can save the current working directory in a variable with command substitution:

d=$( pwd )           # save cwd
cd /somewhere/else   # go somewhere else
# do something else
cd "$d"              # go back to where the script originally started

If you want to print your working directory resolving symbolic links:

$ readlink -m .

(readlink -m comes from GNU coreutils; pwd -P gives the same result in any POSIX shell.)
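To see both ideas in action, here is a minimal sketch, assuming bash and a scratch directory from mktemp (tmp, real, and link are made-up names). It saves the starting directory, cds through a symlink, compares plain pwd (the path as you reached it) with pwd -P (symlinks resolved), and then goes back:

```shell
#!/bin/bash
# Save the cwd, go somewhere through a symlink, compare logical vs. physical paths, return.
start=$( pwd )                  # save cwd

tmp=$( mktemp -d )              # scratch directory for the demo
mkdir "$tmp/real"
ln -s "$tmp/real" "$tmp/link"   # link -> real

cd "$tmp/link" || exit 1
logical=$( pwd )                # the path as we reached it, symlink included
physical=$( pwd -P )            # the same directory with symlinks resolved
echo "logical:  $logical"
echo "physical: $physical"

cd "$start" || exit 1           # go back to where the script started
rm -r "$tmp"                    # clean up the scratch directory
```

The logical path ends in link while the physical one ends in real, even though both name the same directory.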

cd

To move around, we can change directory:

$ cd /some/path

By convention, if we leave off the argument and just type cd we go HOME:

$ cd # go $HOME

Go to the root directory:

$ cd / # go to root

Move up one directory:

$ cd .. # move one back toward root

To return to the directory we were last in:

$ cd - # cd into the previous directory

What if you want to visit the directory you were in two or three directories ago?

The utilities pushd and popd keep track of the directories you move through in a stack.

When you use:

$ pushd some_directory

it acts like:

$ cd some_directory

except that some_directory is also added to the stack. Let's see an example of how to use this:

$ pushd ~/TMP   # we're in ~/
~/TMP ~
$ pushd ~/DATA  # now we're in ~/TMP
~/DATA ~/TMP ~
$ pushd ~       # now we're in ~/DATA
~ ~/DATA ~/TMP ~
$ popd          # now we're in ~/
~/DATA ~/TMP ~
$ popd          # now we're in ~/DATA
~/TMP ~
$ popd          # now we're in ~/TMP
~
$               # now we're in ~/

This is interesting to see once, but I never use pushd and popd. Instead, I like to use a function, cd_func, which I stole off the internet. It allows you to scroll through all of your past directories with the syntax:

$ cd --

Here's the function, which you should paste into your setup dotfiles (e.g., .bash_profile):

cd_func () {
    local x2 the_new_dir adir index
    local -i cnt

    # "cd --" lists the directory stack
    if [[ $1 == "--" ]]; then
        dirs -v
        return 0
    fi

    the_new_dir=$1
    [[ -z $1 ]] && the_new_dir=$HOME

    # "cd -N" goes to entry N of the stack ("cd -" means entry 1)
    if [[ ${the_new_dir:0:1} == '-' ]]; then
        index=${the_new_dir:1}
        [[ -z $index ]] && index=1
        adir=$(dirs +$index)
        [[ -z $adir ]] && return 1
        the_new_dir=$adir
    fi

    # expand a leading ~ to $HOME
    [[ ${the_new_dir:0:1} == '~' ]] && the_new_dir="${HOME}${the_new_dir:1}"

    # change to the new dir and push it onto the stack
    pushd "${the_new_dir}" > /dev/null
    [[ $? -ne 0 ]] && return 1
    the_new_dir=$(pwd)

    # keep at most 11 entries
    popd -n +11 2> /dev/null > /dev/null

    # remove any other copy of the new dir further down the stack
    for ((cnt=1; cnt <= 10; cnt++)); do
        x2=$(dirs +${cnt} 2>/dev/null)
        [[ $? -ne 0 ]] && return 0
        [[ ${x2:0:1} == '~' ]] && x2="${HOME}${x2:1}"
        if [[ "${x2}" == "${the_new_dir}" ]]; then
            popd -n +$cnt 2> /dev/null > /dev/null
            cnt=cnt-1   # cnt is an integer, so this decrements it
        fi
    done
    return 0
}

Also in your setup dotfiles, alias cd to this function:

alias cd=cd_func

and then you can type:

$ cd --
 0  ~
 1  ~/DATA
 2  ~/TMP

to see all the directories you've visited. To go into ~/TMP, for example, enter:

$ cd -2

Changing the subject and dipping one toe into the world of shell scripting, you can put cd into constructions like this:

if ! cd "$outputdir"; then echo "error: couldn't cd into ${outputdir}"; exit 1; fi

or, more succinctly:

cd "$outputdir" || exit

This can be a useful line to include in a script. If the user gives an output directory as an argument and the directory doesn't exist, we exit (with cd's non-zero status). If it does exist, we cd into it and it's business as usual.
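Here is a sketch of how that guard might sit at the top of a script that takes an output directory as its first argument. To keep it easy to try, it's written as a function, enter_outdir (a hypothetical name), that uses return where a standalone script would use exit. Quoting "$1" keeps directory names with spaces intact.

```shell
#!/bin/bash
# Hypothetical helper: cd into the output directory given as $1, or fail loudly.
enter_outdir () {
    local outputdir=$1
    if [[ -z $outputdir ]]; then
        echo "usage: enter_outdir DIR" >&2
        return 2
    fi
    cd "$outputdir" || return 1    # cd prints its own error message on failure
    echo "working in $( pwd )"
}

# Usage, in subshells so the caller's cwd is untouched:
( enter_outdir /tmp )
( enter_outdir /no/such/dir ) || echo "bailed out"
```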

ls

ls lists the files and directories in the cwd, if we leave off arguments. If we pass directories to the command as arguments, it will list their contents. Here are some common flags.

Display our files in a column:

$ ls -1 # list vertically with one line per item

List in long form, showing file permissions, the owner of the file, the group the file belongs to, the date the file was last modified, and the file size:

$ ls -l # long form

List in human-readable (bytes will be rounded to kilobytes, gigabytes, etc.) long form:

$ ls -hl # long form, human readable

List in human-readable long form sorted by the time the file was last modified:

$ ls -hlt # long form, human readable, sorted by time

List in long form all files in the directory, including dotfiles:

$ ls -al # list all, including dot- files and dirs

Since you very frequently want to use ls with these flags, it makes sense to alias them:

alias ls="ls --color=auto"
alias l="ls -hl --color=auto"
alias lt="ls -hltr --color=auto"
alias ll="ls -al --color=auto"

The coloring is key. It will color directories, files, and executables differently, allowing you to quickly scan the contents of your folder. (--color is a GNU ls option; BSD ls, as shipped with macOS, has traditionally used -G for color instead.)

Note that you can use an arbitrary number of arguments and that bash uses the convention that an asterisk matches anything. For example, to list only files with the .txt extension:

$ ls *.txt

This is known as globbing. What would the following do?

$ ls . dir1 .. dir2/*.txt dir3/A*.html

This monstrosity would list anything in the cwd; anything in directory dir1; anything in the directory one above us; anything in directory dir2 that ends with .txt; and anything in directory dir3 that starts with A and ends with .html. You get the point!

ls can be useful in for-loops:

$ for i in $( ls /some/path/*.txt ); do echo $i; done

With this construct, we could do some series of commands on all .txt files in /some/path. You can also do a very similar thing without ls, which is safer because file names containing spaces aren't split apart:

$ for i in /some/path/*.txt; do echo "$i"; done
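A small sketch of why the glob form is safer, assuming bash and a throwaway directory with made-up file names. $( ls ... ) is split on whitespace, so a file called my notes.txt becomes two loop items, while the glob hands each name to the loop whole:

```shell
#!/bin/bash
# Compare looping over $( ls ) vs. over a glob when a file name contains a space.
dir=$( mktemp -d )
touch "$dir/a.txt" "$dir/my notes.txt"

n_ls=0
for i in $( ls "$dir"/*.txt ); do     # word-splits "my notes.txt" into two items
    n_ls=$(( n_ls + 1 ))
done

n_glob=0
for i in "$dir"/*.txt; do             # each match stays a single item
    [[ -e $i ]] || continue           # skip the literal pattern if nothing matched
    echo "found: $i"
    n_glob=$(( n_glob + 1 ))
done

echo "ls loop saw $n_ls items; glob loop saw $n_glob"
rm -r "$dir"
```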

mkdir, rmdir

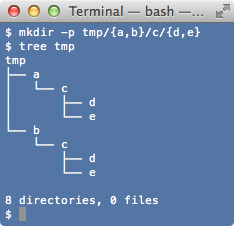

To make a directory (i.e., create a new folder), we use:

$ mkdir mynewfolder

To make nested directories (and not complain if a directory already exists), use the -p flag:

$ mkdir -p a/b/c # make nested directories

You can remove a directory using:

$ rmdir mynewfolder # don't use this

However, since the directory must be empty to use this command, it's not convenient.

Instead, use:

$ rm -r mynewfolder # use this

Since rm has all the functionality you need, I know few people who actually use rmdir.

About the only occasion to use it is if you want to be careful you're not deleting a directory with stuff in it.

(Perhaps rmdir isn't one of the 100 most useful commands, after all.)
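For that one careful use, a sketch of the behavior in a scratch directory (the directory and file names are made up): rmdir removes the empty directory but refuses the one that still has a file in it, which is what makes it an "only delete if empty" check.

```shell
#!/bin/bash
# rmdir refuses to delete a directory that still has contents.
base=$( mktemp -d )
mkdir -p "$base/empty" "$base/full"
touch "$base/full/keep.txt"

empty_removed=no
full_kept=no
rmdir "$base/empty" && empty_removed=yes            # succeeds: nothing inside
rmdir "$base/full" 2> /dev/null || full_kept=yes    # fails: keep.txt is inside
echo "empty removed: $empty_removed; full kept: $full_kept"

rm -r "$base"    # when you really do want everything gone
```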

echo

echo prints the string passed to it as an argument. For example:

$ echo joe
joe

$ echo "joe"
joe

$ echo "joe joe"
joe joe

If you leave off an argument, echo will produce a newline:

$ echo

Suppress newline:

$ echo -n "joe" # suppress newline

Interpret special characters:

$ echo -e "joe\tjoe\njoe" # interpret special chars ( \t is tab, \n newline )

joe joe

joe

As we've seen above, if you want to print a string with spaces, use quotes.

You should also be aware of how bash treats double vs single quotes.

If you use double quotes, any variable inside them will be expanded (the same as in Perl).

If you use single quotes, everything is taken literally and variables are not expanded.

Here's an example:

$ var=5
$ joe=hello $var
-bash: 5: command not found

That didn't work because we forgot the quotes: bash read joe=hello as a variable assignment for a command named 5 (the value of $var). Let's fix it:

$ joe="hello $var"
$ echo $joe
hello 5

$ joe='hello $var'
$ echo $joe
hello $var

Sometimes, when you run a script verbosely, you want to echo commands before you execute them. A stdout log file of this type is invaluable if you want to retrace your steps later. One way of doing this is to save a command in a variable, cmd, echo it, and then pipe it into bash or sh. Your script might look like this:

cmd="ls -hl"; echo $cmd; echo $cmd | bash
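Piping the string into bash works, but the command is re-parsed by a new shell, so quotes inside $cmd can behave in surprising ways. A different technique (not the one above) is a small helper, here called run as a hypothetical name, that prints its arguments and then executes them directly so each argument arrives intact. A sketch, assuming bash:

```shell
#!/bin/bash
# Hypothetical helper: echo a command for the log, then run it with its arguments unchanged.
run () {
    echo "+ $*"    # log line (arguments joined by spaces)
    "$@"           # execute exactly the arguments we were given; returns its status
}

run ls -hl
run echo "hello   world"    # the inner spaces survive because "$@" keeps each argument whole
```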

cat, zcat, tac

cat prints the contents of files passed to it as arguments. For example:

$ cat file.txt

prints the contents of file.txt. Entering:

$ cat file.txt file2.txt

would print out the contents of both file.txt and file2.txt concatenated together, which is where this command gets its slightly confusing name. Print file with line numbers:

$ cat -n file.txt # print file with line numbers

cat is frequently seen in unix pipelines.

For instance:

$ cat file.txt | wc -l   # count the number of lines in a file
$ cat file.txt | cut -f1  # cut the first column

Some people deride this as unnecessarily verbose, but I'm so used to piping anything and everything that I embrace it. Another common construction is:

cat file.txt | awk ...

We'll discuss awk below, but the key point about it is that it works line by line.

So awk will process what cat pipes out in a linewise fashion.

If you route something to cat via a pipe, it just passes through:

$ echo "hello kitty" | cat
hello kitty

The -vet flag allows us to "see" special characters, like tab, newline, and carriage return:

$ echo -e "\t" | cat -vet
^I$
$ echo -e "\n" | cat -vet
$
$
$ echo -e "\r" | cat -vet
^M$

This can come into play if you're looking at a file produced on a PC, which ends each line with the horrid \r\n (a carriage return plus a newline) as opposed to the nice unix newline, \n. You can do a similar thing with the command od, as we'll see below.
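To check a file for those carriage returns and strip them, a sketch like this should work, assuming bash (dos.txt and unix.txt are made-up names). It writes a two-line file with Windows-style endings, shows it with cat -vet, counts the lines containing \r with grep -c, and removes the \r characters with tr -d:

```shell
#!/bin/bash
# Detect and remove Windows-style \r\n line endings.
tmp=$( mktemp -d )
printf 'line one\r\nline two\r\n' > "$tmp/dos.txt"

cat -vet "$tmp/dos.txt"                      # each line ends in ^M$
cr_lines=$( grep -c $'\r' "$tmp/dos.txt" )   # number of lines containing a carriage return
echo "lines with \\r: $cr_lines"

tr -d '\r' < "$tmp/dos.txt" > "$tmp/unix.txt"
cat -vet "$tmp/unix.txt"                     # now each line ends in plain $
cr_after=$( grep -c $'\r' "$tmp/unix.txt" )  # grep -c prints 0 when nothing matches

rm -r "$tmp"
```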

There are two variations on cat, which occasionally come in handy. zcat allows you to cat gzip-compressed files:

$ zcat file.txt.gz

You can also see a file in reverse order, bottom first, with tac (tac is cat spelled backwards). tac comes from GNU coreutils; on macOS, tail -r does the same job.

cp

The command to make a copy of a file is cp:

$ cp file1 file2

Use the recursive flag, -R, to copy directories plus files:

$ cp -R dir1 dir2 # copy directories

The directory and everything inside it are copied.

Question: what would the following do?

$ cp -R dir1 ../../

Answer: it would make a copy of dir1 up two levels from our current working directory.

Tip: If you're copying a large directory structure with lots of files in it, use rsync instead of cp. If the command fails midway through, rsync can start from where it left off but cp can't.

mv

To rename a file or directory we use mv:

$ mv file1 file2

In a sense, this command also moves files, because we can rename a file into a different path. For example:

$ mv file1 dir1/dir2/file2

would move file1 into dir1/dir2/ and change its name to file2, while:

$ mv file1 dir1/dir2/

would simply move file1 into dir1/dir2/ (or, if you like, rename ./file1 as ./dir1/dir2/file1).

Swap the names of two files, a and b:

$ mv a a.1
$ mv b a
$ mv a.1 b

Change the extension of a file, test.txt, from .txt to .html:

$ mv test.txt test.html

Shortcut (bash brace expansion turns this into the command above):

$ mv test.{txt,html}

mv can be dangerous because, if you move a file into a directory where a file of the same name exists, the latter will be overwritten.

To prevent this, use the -n flag:

$ mv -n myfile mydir/ # move, unless "myfile" exists in "mydir"
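Swapping two names by hand, as shown earlier, is easy to get wrong, so it can be worth wrapping in a function. A sketch, assuming bash (swap is a hypothetical name): it refuses to run if either file is missing or the temporary name is already taken, and stops at the first failed mv.

```shell
#!/bin/bash
# Hypothetical helper: swap the names of two files through a temporary name.
swap () {
    local a=$1 b=$2
    local tmp="$a.swap.$$"     # $$ is this shell's PID, which makes a collision unlikely
    if [[ ! -e $a || ! -e $b ]]; then
        echo "swap: both '$a' and '$b' must exist" >&2
        return 1
    fi
    if [[ -e $tmp ]]; then
        echo "swap: temporary name '$tmp' is taken" >&2
        return 1
    fi
    mv "$a" "$tmp" && mv "$b" "$a" && mv "$tmp" "$b"
}

# Usage in a scratch directory:
cd "$( mktemp -d )" || exit 1
echo apple > a
echo banana > b
swap a b
cat a    # banana
cat b    # apple
```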

rm

The command rm removes the files you pass to it as arguments:

$ rm file # removes a file

Use the recursive flag, -r, to remove a file or a directory:

$ rm -r dir # removes a file or directory

If there's a permission issue or the file doesn't exist, rm will throw an error.

You can override this with the force flag, -f:

$ rm -rf dir # force removal of a file or directory
             # (i.e., ignore warnings)

You may be aware that when you delete files, they can still be recovered with effort if your computer hasn't overwritten their contents. To securely delete your files, meaning overwrite them before deleting, use:

$ rm -P file # overwrite your file then delete (BSD/macOS rm only; GNU rm has no -P)

or use shred.

shred

Securely remove your file by overwriting then removing:

$ shred -zuv file # removes a file securely
                  # (flags are: zero, remove, verbose)

For example:

$ touch crapfile # create a file
$ shred -zuv crapfile
shred: crapfile: pass 1/4 (random)...
shred: crapfile: pass 2/4 (random)...
shred: crapfile: pass 3/4 (random)...
shred: crapfile: pass 4/4 (000000)...
shred: crapfile: removing
shred: crapfile: renamed to 00000000
shred: 00000000: renamed to 0000000
shred: 0000000: renamed to 000000
shred: 000000: renamed to 00000
shred: 00000: renamed to 0000
shred: 0000: renamed to 000
shred: 000: renamed to 00
shred: 00: renamed to 0
shred: crapfile: removed

As the man pages note, this isn't perfectly secure if your file system has made a copy of your file and stored it in some other location.

Read the man page (man shred) for details.

man

man shows the usage manual, or help page, for a command. For example, to see the manual for ls:

$ man ls

To see man's own manual page:

$ man man

The manual pages will list all of the command's flags, which usually come in a one-dash-one-letter or two-dashes-one-word flavor:

command -f
command --flag

head, tail

Based off of An Introduction to the Command-Line (on Unix-like systems) - head and tail: head and tail print the first or last n lines of a file, where n is 10 by default. For example:

$ head myfile.txt      # print the first 10 lines of the file
$ head -1 myfile.txt   # print the first line of the file
$ head -50 myfile.txt  # print the first 50 lines of the file

$ tail myfile.txt      # print the last 10 lines of the file
$ tail -1 myfile.txt   # print the last line of the file
$ tail -50 myfile.txt  # print the last 50 lines of the file

These are great alternatives to cat, because often you don't want to spew out a giant file. You only want to peek at it to see the formatting, get a sense of how it looks, or home in on some specific portion.

If you combine head and tail together in the same command chain, you can get a specific row of your file by row number. For example, print row 37:

$ cat -n file.txt | head -37 | tail -1 # print row 37
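Wrapped in a function (row is a hypothetical name), the head/tail trick might look like the sketch below. It skips cat -n so only the line's content is printed, and shows sed -n '37p' as another way to get the same line:

```shell
#!/bin/bash
# Hypothetical helper: print line N of a file with head and tail (no line-number prefix).
row () {
    local n=$1 file=$2
    head -n "$n" "$file" | tail -n 1    # note: prints the last line if the file is shorter than N
}

demo=$( mktemp )
seq 1 100 > "$demo"      # a 100-line file whose line N is just N
row 37 "$demo"           # 37
sed -n '37p' "$demo"     # 37 again, using sed instead
```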

Another thing I like to do with head is look at multiple files simultaneously.

Whereas cat concatenates files together, as in:

$ cat hello.txt
hello
hello
hello

$ cat kitty.txt
kitty
kitty
kitty

$ cat hello.txt kitty.txt
hello
hello
hello
kitty
kitty
kitty

head will print out the file names when it takes multiple arguments, as in:

$ head -2 hello.txt kitty.txt
==> hello.txt <==
hello
hello

==> kitty.txt <==
kitty
kitty

which is useful if you're previewing many files. To preview all files in the current directory:

$ head *

See the last 10 lines of your bash history:

$ history | tail # show the last 10 lines of history

See the first 10 elements in the cwd:

$ ls | head

less, zless, more

Based off of An Introduction to the Command-Line (on Unix-like systems) - less and more: less is, as the man pages say, "a filter for paging through text one screenful at a time...which allows backward movement in the file as well as forward movement." This makes it one of the odd unix commands whose name seems to bear no relation to its function. If you have a big file, vanilla cat is not suitable because printing thousands of lines of text to stdout will flood your screen. Instead, use less, the go-to command for viewing files in the terminal:

$ less myfile.txt # view the file page by page

Another nice thing about less is that it has many Vim-like features, which you can read about on its man page (and this is not a coincidence).

For example, if you want to search for the word apple in your file, you just type slash ( / ) followed by apple.

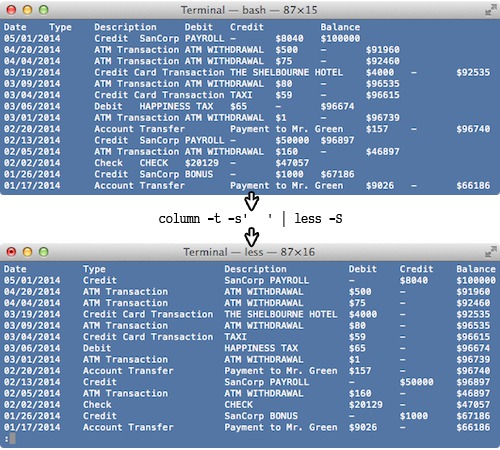

If you have a file with many columns, it's hard to view in the terminal. A neat less flag to solve this problem is -S:

$ less -S myfile.txt # allow horizontal scrolling

This enables horizontal scrolling instead of having rows messily wrap onto the next line.

As we'll discuss below, this flag works particularly well in combination with the column command, which forces the columns of your file to line up nicely:

cat myfile.txt | column -t | less -S

Use zless to less a gzipped file:

$ zless myfile.txt.gz # view a gzipped file

I included less and more together because I think about them as a pair, but I told a little white lie in calling more indispensable: you really only need less, which is an improved version of more.

Less is more :-)

grep, egrep

Based off of An Introduction to the Command-Line (on Unix-like systems) - grep: grep is the terminal's analog of find from ordinary computing (not to be confused with unix find). If you've ever used Safari or TextEdit or Microsoft Word, you can find a word with ⌘F (Command-f) on Macintosh. Similarly, grep searches for text in a file and returns the line(s) where it finds a match. For example, if you were searching for the word apple in your file, you'd do:

$ grep apple myfile.txt # return lines of file with the text apple

grep has many nice flags, such as:

$ grep -n apple myfile.txt       # include the line number
$ grep -i apple myfile.txt       # case insensitive matching
$ grep --color apple myfile.txt  # color the matching text

Also useful are what I call the ABCs of Grep: After, Before, Context. Here's what they do:

$ grep -A1 apple myfile.txt # return lines with the match,
                            # as well as 1 after

$ grep -B2 apple myfile.txt # return lines with the match,
                            # as well as 2 before

$ grep -C3 apple myfile.txt # return lines with the match,
                            # as well as 3 before and after

You can do an inverse grep with the -v flag. Find lines that don't contain apple:

$ grep -v apple myfile.txt # return lines that don't contain apple

Find any occurrence of apple in any file in a directory with the recursive flag:

$ grep -R apple mydirectory/ # search for apple in any file in mydirectory

grep works nicely in combination with history:

$ history | grep apple # find commands in history containing "apple"

Exit after, say, finding the first two instances of apple so you don't waste more time searching:

$ cat myfile.txt | grep -m 2 apple

There are more powerful variants of grep, like egrep (equivalent to grep -E), which permits extended regular expressions, as in:

$ egrep "apple|orange" myfile.txt # return lines with apple OR orange

as well as other fancier grep-like tools, such as ack, available for download.
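A quick way to see several of these flags side by side is to run them on a small made-up file. This sketch also uses -c, which prints a count of matching lines instead of the lines themselves, and writes the alternation with grep -E, which does the same thing as egrep (newer GNU grep releases warn that the egrep name is obsolescent):

```shell
#!/bin/bash
# Try a few grep flags on a small, made-up file.
fruit=$( mktemp )
cat > "$fruit" << 'EOF'
apple pie
banana split
Apple cider
orange juice
cherry tart
EOF

grep -n apple "$fruit"              # 1:apple pie
grep -i -c apple "$fruit"           # 2 (count of matching lines, ignoring case)
grep -v -c apple "$fruit"           # 4 (lines without a lowercase "apple")
grep -E "apple|orange" "$fruit"     # apple pie, orange juice (same as egrep)
grep -m 1 -i apple "$fruit"         # apple pie (stop after the first match)
```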

For some reason—perhaps because it's useful in a quick and dirty sort of a way and doesn't have any other meanings in English—grep has inspired a peculiar cult following.

There are grep t-shirts, grep memes, a grep function in Perl, and, unbelievably, even a whole O'Reilly book devoted to the command.

Tip: If you're a Vim user, running grep as a system command within Vim is a neat way to filter text. Read more about it in Wiki Vim - System Commands in Vim.

Tip: Recently a grep-like tool called fzf has become popular. The authors call it a "general-purpose command-line fuzzy finder".

which

which shows you the path of a command in your PATH. For example, on my computer:

$ which less
/usr/bin/less

$ which cat
/bin/cat

$ which rm
/bin/rm

If there is more than one of the same command in your PATH, which will show you the one which you're using (i.e., the first one in your PATH). Suppose your PATH is the following:

$ echo $PATH
/home/username/mydir:/usr/local/bin:/bin:/usr/bin:/usr/local/sbin

And suppose myscript.py exists in 2 locations in your PATH:

$ ls /home/username/mydir/myscript.py
/home/username/mydir/myscript.py

$ ls /usr/local/bin/myscript.py
/usr/local/bin/myscript.py

Then:

$ which myscript.py

/home/username/mydir/myscript.py # this copy of the script has precedence

You can use which in if-statements to test for dependencies:

if ! which mycommand > /dev/null 2>&1; then echo "command not found"; fi

My friend, Albert, wrote a nice tool called catwhich, which cats the file returned by which:

#!/bin/bash
# cat a file in your path
file=$(which "$1" 2>/dev/null)
if [[ -f $file ]]; then
    cat "$file"
else
    echo "file \"$1\" does not exist!"
fi

This is useful for reading files in your PATH without having to track down exactly what directory you put them in.
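For the dependency test shown earlier, many scripts use the shell builtin command -v instead of which: it's part of POSIX, so it behaves the same on Linux and macOS, and it exits non-zero without printing anything when the command isn't found. A sketch, where require is a hypothetical helper name:

```shell
#!/bin/bash
# Hypothetical helper: report every missing command and fail if any are missing.
require () {
    local missing=0 cmd
    for cmd in "$@"; do
        if ! command -v "$cmd" > /dev/null 2>&1; then
            echo "command not found: $cmd" >&2
            missing=1
        fi
    done
    return "$missing"
}

require grep sort || exit 1                   # standard tools, so this passes
require no_such_tool_xyz || echo "install no_such_tool_xyz first"
```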

chmod

From An Introduction to the Command-Line (on Unix-like systems) - chmod: chmod adds or removes permissions from files or directories. In unix there are three spheres of permissions:

- u - user

- g - group

- o - other/world

and three permission types:

- r - read

- w - write

- x - execute

To see the current permissions on a file, list it in long form:

$ ls -hl myfile

We can mix and match permission types and entities how we like, using a plus sign to grant permissions according to the syntax:

chmod entity+permissiontype

or a minus sign to remove permissions:

chmod entity-permissiontype

E.g.:

$ chmod u+x myfile   # make executable for you
$ chmod g+rxw myfile # add read write execute permissions for the group
$ chmod go-wx myfile # remove write execute permissions for the group
                     # and for everyone else (excluding you, the user)

You can also use a for "all of the above", as in:

$ chmod a-rwx myfile # remove all permissions for you, the group,
                     # and the rest of the world

If you find the above syntax cumbersome, there's a numerical shorthand you can use with chmod. The only three I have memorized are 000, 777, and 755:

$ chmod 000 myfile # revoke all permissions (---------)
$ chmod 777 myfile # grant all permissions (rwxrwxrwx)
$ chmod 755 myfile # reserve write access for the user,
                   # but grant all other permissions (rwxr-xr-x)

Read more about the numeric code in man chmod. In general, it's a good practice to allow your files to be writable by you alone, unless you have a compelling reason to share access to them.
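The numeric code is just arithmetic: each digit covers one sphere (user, group, other, in that order) and is the sum of read = 4, write = 2, and execute = 1. So rwx is 4+2+1 = 7 and r-x is 4+0+1 = 5, which is where 755 comes from. A small sketch, assuming bash, that converts the nine-character string ls -l prints (perm_to_octal is a hypothetical name; it ignores the special setuid/setgid/sticky letters s and t):

```shell
#!/bin/bash
# Hypothetical helper: convert a 9-character permission string (e.g. rwxr-xr-x) to octal.
perm_to_octal () {
    local perms=$1 out="" i digit
    for i in 0 3 6; do                            # user, group, other
        digit=0
        [[ ${perms:i:1}   == r ]] && (( digit += 4 ))
        [[ ${perms:i+1:1} == w ]] && (( digit += 2 ))
        [[ ${perms:i+2:1} == x ]] && (( digit += 1 ))
        out+=$digit
    done
    echo "$out"
}

perm_to_octal rwxr-xr-x   # 755
perm_to_octal rwxrwxrwx   # 777
perm_to_octal ---------   # 000
```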

chown

chown, less commonly seen than its cousin chmod, changes the owner of a file. As an example, suppose myfile is owned by root, but you want to grant ownership to ubuntu (the default user on ubuntu) or ec2-user (the default user on Amazon Linux). The syntax is:

$ sudo chown ec2-user myfile

If you want to change both the user to someuser and the group to somegroup, use:

$ sudo chown someuser:somegroup myfile

If you want to change the user and group to your current user, chown works well in combination with whoami:

$ sudo chown $( whoami ):$( whoami ) myfile

history

From An Introduction to the Command-Line (on Unix-like systems) - history: Bash is a bit like the NSA in that it's secretly recording everything you do, at least on the command line. But fear not: unless you're doing something super unsavory, you want your history preserved because having access to previous commands you've entered in the shell saves a lot of labor. You can see your history with the command history:

$ history

The easiest way to search the history, as we've seen, is to bind the Readline Function history-search-backward to something convenient, like the up arrow. Then you just press up to scroll backwards through your history. So, if you enter a c, pressing up will step through all the commands you entered that began with c. If you want to find a command that contains the word apple, a useful tool is reverse incremental search, which is invoked with Ctrl-r:

(reverse-i-search)`':

Although we won't cover unix pipelines until the next section, I can't resist giving you a sneak-preview.

An easier way to find any commands you entered containing apple is:

$ history | grep apple

If you wanted to see the last 10 commands you entered, it would be:

$ history | tail

What's going on under the hood is somewhat complicated. Bash actually records your command history in two ways: (1) it stores it in memory (the history list) and (2) it stores it in a file (the history file). There are a slew of global variables that mediate the behavior of history, but some important ones are:

- HISTFILE - the path to the history file

- HISTSIZE - how many commands to store in the history list (memory)

- HISTFILESIZE - how many commands to store in the history file

By default, the history file is ~/.bash_history.

It's important to understand the interplay between the history list and the history file.

In the default setup, when you type a command at the prompt it goes into your history list—and is thus revealed via history—but it doesn't get written into your history file until you log out.

The next time you log in, the contents of your history file are automatically read into memory (your history list) and are thus searchable in your session.

So, what's the difference between the following two commands?

$ history | tail
$ tail ~/.bash_history

The former will show you the last 10 commands you entered, including those from your current session, while the latter will show you only the last 10 commands saved from previous sessions. This is all well and good, but let's imagine the following scenario: you're like me and you have 5 or 10 different terminal windows open at the same time. You're constantly switching windows and entering commands, but history-search is such a useful function that you want any command you enter in any one of the 5 windows to be immediately accessible on all the others. We can accomplish this by putting the following lines in our setup dotfile (.bash_profile):

# append history to history file as you type in commands
shopt -s histappend
export PROMPT_COMMAND='history -a'

How does this bit of magic work? Here's what the histappend option does, to quote from the man page of shopt:

If set, the history list is appended to the history file when the shell exits, rather than overwriting the history file.

Let's break this down. shopt stands for "shell options" and is for enabling and disabling various miscellaneous options. The line

shopt -s histappend

turns on the option quoted above, and setting

PROMPT_COMMAND='history -a'

appends every line to the history file individually. With these two settings, a new shell will get the history lines from all previous shells, instead of the default, where the history file ends up holding only what the last window to close wrote to it (the history file is named by the value of the HISTFILE variable). The

history -a

part will "Append the new history lines (history lines entered since the beginning of the current Bash session) to the history file" (as the man page says).

And the global variable PROMPT_COMMAND is a rather funny one that executes whatever code is stored in it right before each printing of the prompt.

Put these together and you're immediately updating the ~/.bash_history file with every command instead of waiting for this to happen at logout.

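Since every shell can contribute in real time, any new shell you open starts out with a common history drawn from all of them, a good situation. A shell that's already open only sees the other shells' new lines after it rereads the file, for example with history -n; setting PROMPT_COMMAND='history -a; history -n' does both at every prompt.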

What if you are engaged in unsavory behavior and want to cover your tracks? You can clear your history as follows:

$ history -c # clear the history list in memory

However, this only deletes what's in memory.

If you really want to be careful, you had better check your history file, .bash_history, and delete any offending portions.

Or just wipe the whole thing:

$ echo > ~/.bash_history

You can read more about history on gnu.org.

A wise man once said, "Those who don't know history are destined to [re-type] it." Often it's necessary to retrace your footsteps or repeat some commands. In particular, if you're doing research, you'll be juggling lots of scripts and files and how you produced a file can quickly become mysterious. To deal with this, I like to selectively keep shell commands worth remembering in a "notes" file. If you looked on my computer, you'd see notes files scattered everywhere. You might protest: but these are in your history, aren't they? Yes, they are, but the history is gradually erased; it's clogged with trivial commands like ls and cd; and it doesn't have information about where the command was run. A month from now, your ~/.bash_history is unlikely to have that long command you desperately need to remember, but your curated notes file will.

To make this easy I use the following alias:

alias n="history | tail -2 | head -1 | tr -s ' ' | cut -d' ' -f3- | awk '{print \"# \"\$0}' >> notes"

or equivalently:

alias n="echo -n '# ' >> notes && history | tail -2 | head -1 | tr -s ' ' | cut -d' ' -f3- >> notes"

To use this, just type n (for notesappend) after a command:

$ ./long_hard-to-remember_command --with_lots --of_flags > poorly_named_file
$ n

Now a notes file has been created (or appended to) in our cwd and we can look at it:

$ cat notes
# ./long_hard-to-remember_command --with_lots --of_flags > poorly_named_file

I use notesappend almost as much as I use the regular unix utilities.

clear

clear clears your screen:

$ clear

It gives you a clean slate without erasing any of your commands, which are still preserved if you scroll up. clear works in some other shells, such as ipython's. In most shells, including those where clear doesn't work, like MySQL's and Python's, you can use Ctrl-l to clear the screen.

logout, exit

Both logout and exit do exactly what their name suggests: quit your current session. Another way of doing the same thing is the key combination Ctrl-D, which works across a broad variety of different shells, including Python's and MySQL's.

sudo

Based off of An Introduction to the Command-Line (on Unix-like systems) - sudo and the Root User: sudo and the Root User sounds like one of Aesop's Fables, but the moralist never considered file permissions and system administration a worthy subject. We saw above that, if you're unsure of your user name, the command whoami tells you who you're logged in as. If you list the files in the root directory:

$ ls -hl /

you'll notice that these files do not belong to you but, rather, are owned by another user named root. Did you know you were sharing your computer with somebody else? You're not exactly, but there is another account on your computer for the root user who has, as far as the computer's concerned, all the power (see Wikipedia's article on the superuser). It's good that you're not the root user by default because it protects you from yourself. You don't have permission to catastrophically delete an important system file.

If you want to run a command as the superuser, you can use sudo. For example, you'll have trouble if you try to make a directory called junk in the root directory:

$ mkdir /junk
mkdir: cannot create directory ‘/junk’: Permission denied

However, if you invoke this command as the root user, you can do it:

$ sudo mkdir /junk

provided you type in the password. Because root, not your user, owns this directory, you also need sudo to remove it:

$ sudo rmdir /junk

If you want to experience life as the root user, try:

$ sudo -i

Here's what this looks like on my computer:

$ whoami   # check my user name
oliver
$ sudo -i  # simulate login as root
Password:
$ whoami   # check user name now
root
$ exit     # log out of root account
logout
$ whoami   # check user name again
oliver

Obviously, you should be cautious with sudo. When might using it be appropriate? The most common use case is when you have to install a program, which might want to write into a directory root owns, like /usr/bin, or access system files. I discuss installing new software below. You can also do terrible things with sudo, such as gut your whole computer with a command so unspeakable I cannot utter it in syntactically viable form. That's sudo are em dash are eff forward slash; it lives in infamy in an Urban Dictionary entry.

You can grant root permissions to various users by tinkering with the configuration file:

/etc/sudoers

which says: "Sudoers allows particular users to run various commands as the root user, without needing the root password."

Needless to say, only do this if you know what you're doing.

su

su switches the user you're logged in as in the terminal. For example, to change to the user jon:

$ su jon

where you have to know jon's password.

wc

wc counts the number of words, lines, or characters in a file.

Count lines:

$ cat myfile.txt
aaa
bbb
ccc
ddd

$ cat myfile.txt | wc -l
4

Count words:

$ echo -n joe | wc -w
1

Count characters (strictly, -c counts bytes; -m counts characters, which differ for multibyte text):

$ echo -n joe | wc -c
3
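The -n in those examples matters: plain echo appends a newline, and wc counts it. A quick sketch of the difference, assuming bash; the $(( )) wrapper just trims the padding some wc versions (e.g. on macOS) put in front of the number:

```shell
#!/bin/bash
# echo appends a newline unless given -n, and wc -c counts that newline.
with_newline=$(( $( echo joe | wc -c ) ))        # "joe\n" is 4 bytes
without_newline=$(( $( echo -n joe | wc -c ) ))  # "joe" is 3 bytes
echo "with newline: $with_newline, without: $without_newline"

# wc -l counts newline characters, so a final line with no trailing newline isn't counted.
counted_lines=$(( $( printf 'aaa\nbbb' | wc -l ) ))   # 1, not 2
echo "lines counted: $counted_lines"
```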

sort

From An Introduction to the Command-Line (on Unix-like systems) - sort: As you guessed, the command sort sorts files. It has a large man page, but we can learn its basic features by example. Let's suppose we have a file, testsort.txt, such that:

$ cat testsort.txt
vfw 34 awfjo
a 4 2
f 10 10
beb 43 c
f 2 33
f 1 ?

Then:

$ sort testsort.txt
a 4 2
beb 43 c
f 1 ?
f 10 10
f 2 33
vfw 34 awfjo

What happened? The default behavior of sort is to dictionary sort the rows of a file, comparing whole lines character by character, which here amounts to sorting by the first column, then the second column, and so on. Where the first column has the same value (f in this example), the values of the second column determine the order of the rows. Dictionary sort means that things are sorted as they would be in a dictionary: 1,2,10 gets sorted as 1,10,2. If you want to do a numerical sort, use the -n flag; if you want to sort in reverse order, use the -r flag. You can also sort according to a specific column. The notation for this is:

sort -kn,m

where n and m are column numbers: the sort key runs from column n through column m.

In practice, it may be easier to use a single column rather than a range so, for example:

sort -k2,2

means sort by the second column (technically from column 2 to column 2; with a bare -k2, the key would run from column 2 to the end of the line).

To sort numerically by the second column:

$ sort -k2,2n testsort.txt
f 1 ?
f 2 33
a 4 2
f 10 10
vfw 34 awfjo
beb 43 c

As is often the case in unix, we can combine flags as much as we like.

Question: what does this do?

$ sort -k1,1r -k2,2n testsort.txt
vfw 34 awfjo
f 1 ?
f 2 33
f 10 10
beb 43 c
a 4 2

Answer: the file has been sorted first by the first column, in reverse dictionary order, and then, where the first column is the same, by the second column in numerical order. You get the point!

Sort uniquely:

$ sort -u testsort.txt # sort uniquely

Sort using a designated tmp directory:

$ sort -T /my/tmp/dir testsort.txt # sort using a designated tmp directory

Behind the curtain, sort does its work by making temporary files, and it needs a place to put those files.

By default, this is the directory named by the TMPDIR environment variable (or /tmp if TMPDIR isn't set), but if you have a giant file to sort, you might have reason to instruct sort to use another directory, and that's what this flag does.

Sort numerically if the columns are in scientific notation:

$ sort -g testsort.txt

sort works particularly well with uniq, which only spots duplicates on adjacent lines, so its input generally needs to be sorted first. For example, look at the following list of numbers:

$ echo "2 2 2 1 2 1 3 4 5 6 6" | tr " " "\n" | sort
1
1
2
2
2
2
3
4
5
6
6

Find the duplicate entries:

$ echo "2 2 2 1 2 1 3 4 5 6 6" | tr " " "\n" | sort | uniq -d
1
2
6

Find the non-duplicate entries:

$ echo "2 2 2 1 2 1 3 4 5 6 6" | tr " " "\n" | sort | uniq -u
3
4
5
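A close cousin of these idioms counts how often each entry occurs: uniq -c prefixes each line with its count, and a second, numeric, reverse sort on that count puts the most common entries first. A sketch using the same list of numbers:

```shell
# Count occurrences of each value, then list the most frequent first
echo "2 2 2 1 2 1 3 4 5 6 6" | tr " " "\n" | sort | uniq -c | sort -k1,1nr
```

The first line of output shows a count of 4 for the value 2. Entries with equal counts come out in whatever order your sort's tie-breaking produces, so don't rely on that order.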

ssh

As discussed in An Introduction to the Command-Line (on Unix-like systems) - ssh, the Secure Shell (ssh) protocol is as fundamental as tying your shoes. Suppose you want to use a computer, but it's not the computer that's in front of you. It's a different computer in some other location—say, at your university or on the Amazon cloud. ssh is the command that allows you to log into a computer remotely over the network. Once you've sshed into a computer, you're in its shell and can run commands on it just as if it were your personal laptop. To ssh, you need to know the address of the host computer you want to log into, your user name on that computer, and the password. The basic syntax is:

ssh username@host

For example:

$ ssh username@myhost.university.edu

If you have a Macintosh, you can allow users to ssh into your personal computer by turning ssh access on. Go to:

System Preferences > Sharing > Remote Login

ssh with the flag -Y to enable X11 (X Window System) forwarding:

$ ssh -Y username@myhost.university.edu

Check that this succeeded:

$ echo $DISPLAY # this should not be empty
$ xclock # this should pop open X11's xclock

ssh also allows you to run a command on the remote server without opening an interactive session. For instance, to list the contents of your remote computer's home directory, you could run:

$ ssh -Y username@myhost.university.edu "ls -hl"

Cool, eh?

The file:

~/.ssh/config

determines ssh's behavior and you can create it if it doesn't exist.

In the next section, you'll see how to modify this config file to use an IdentityFile, so that you're spared the annoyance of typing in your password every time you ssh (see also Nerderati: Simplify Your Life With an SSH Config File).

If you're frequently getting booted from a remote server after periods of inactivity, try putting something like this into your config file (it makes ssh send a keep-alive request to the server after 300 seconds without traffic):

ServerAliveInterval = 300

If this is your first encounter with ssh, you'd be surprised how much of the work of the world is done by ssh. It's worth reading the extensive man page, which gets into matters of computer security and cryptography.

ssh-keygen

On your own private computer, you can set things up so that you can ssh into a particular server without typing in your password. To do this, first generate an RSA key pair:

$ mkdir -p ~/.ssh && cd ~/.ssh
$ ssh-keygen -t rsa -b 4096 -f localkey

This will create two files on your computer, a public key:

~/.ssh/localkey.pub

and a private key:

~/.ssh/localkey

You can share your public key, but do not give anyone your private key!

Suppose you want to ssh into myserver.com.

Normally, that's:

$ ssh myusername@myserver.com

Add these lines to your ~/.ssh/config file:

Host Myserver
    HostName myserver.com
    User myusername
    IdentityFile ~/.ssh/localkey

Now cat your public key and paste it into:

~/.ssh/authorized_keys

on the remote machine (i.e., on myserver.com).

Now on your local computer, you can ssh without a password:

$ ssh Myserver

You can also use this technique to push to github.com without having to punch in your password each time, as described here.

scp

If you have ssh access to a remote computer and want to copy its files to your local computer, you can use scp according to the syntax:

scp username@host:/some/path/on/remote/machine /some/path/on/my/machine

However, I would advise using rsync instead of scp: it does the same thing only better.

rsync

rsync can remotely sync files to or from a computer to which you have ssh access. The basic syntax is:

rsync source destination

For example, copy files from a remote machine:

$ rsync username@host:/some/path/on/remote/machine /some/path/on/my/machine/

Copy files to a remote machine:

$ rsync /some/path/on/my/machine username@host:/some/path/on/remote/machine/

You can also use it to sync two directories on your local machine (the -a flag, explained below, implies the recursive -r flag; without it, rsync skips directories):

$ rsync -a directory1 directory2

The great thing about rsync is that, if it fails or you stop it in the middle, you can restart it and it will skip whatever already made it across and carry on from there. It does not blindly copy directories (or files) but rather synchronizes them, which is to say, makes them the same. If, for example, you try to copy a directory that you already have, rsync will detect this and transfer no files.

I like to use the flags:

$ rsync -azv --progress username@myhost.university.edu:/my/source /my/destination/

The -a flag is for archive, which "ensures that symbolic links, devices, attributes, permissions, ownerships, etc. are preserved in the transfer"; the -z flag compresses files during the transfer; -v is for verbose; and --progress shows you your progress. I've enshrined this in an alias:

alias yy="rsync -azv --progress"

Preview rsync's behavior without actually transferring any files:

$ rsync -azv --dry-run username@myhost.university.edu:/my/source /my/destination/

Do not copy certain directories from the source:

$ rsync -azv --exclude mydir username@myhost.university.edu:/my/source /my/destination/

In this example, the directory /my/source/mydir will not be copied, and you can omit more directories by repeating the --exclude flag.

Copy the files pointed to by the symbolic links ("transform symlink into referent file/dir") with the -L flag:

$ rsync -azv -L --progress username@myhost.university.edu:/my/source /my/destination/

Note: using a trailing slash can alter the behavior of rsync. For example:

$ mkdir folder1 folder2 folder3 # make directories
$ touch folder1/{file1,file2} # create empty files
$ rsync -av folder1 folder2 # no trailing slash
$ rsync -av folder1/ folder3 # trailing slash

No trailing slash copies the directory folder1 into folder2:

$ tree folder2
folder2
└── folder1
    ├── file1
    └── file2

whereas using a trailing slash copies the files in folder1 into folder3:

$ tree folder3
folder3
├── file1
└── file2

source, export

From An Introduction to the Command-Line (on Unix-like systems) - Source and Export: Question: if we create some variables in a script and exit, what happens to those variables? Do they disappear? The answer is, yes, they do. Let's make a script called test_src.sh such that:

$ cat ./test_src.sh
#!/bin/bash
myvariable=54
echo $myvariable

If we run it and then check what happened to the variable on our command line, we get:

$ ./test_src.sh
54
$ echo $myvariable

The variable is undefined. The command source is for solving this problem. If we want the variable to persist, we run:

$ source ./test_src.sh
54
$ echo $myvariable
54

and, voilà, our variable exists in the shell. An equivalent syntax for sourcing uses a dot:

$ . ./test_src.sh # this is the same as "source ./test_src.sh"

54

But now observe the following.

We'll make a new script, test_src_2.sh, such that:

$ cat ./test_src_2.sh
#!/bin/bash
echo $myvariable

This script is also looking for $myvariable. Running it, we get:

$ ./test_src_2.sh

Nothing! So $myvariable is defined in the shell but, if we run another script, its existence is unknown. Let's amend our original script to add in an export:

$ cat ./test_src.sh

#!/bin/bash

export myvariable=54 # export this variable

echo $myvariable

Now what happens?

$ ./test_src.sh
54
$ ./test_src_2.sh

Still nothing! Why? Because we didn't source test_src.sh. Trying again:

$ source ./test_src.sh
54
$ ./test_src_2.sh
54

So, at last, we see how to do this. If we want access on the shell to a variable which is defined inside a script, we must source that script. If we want other scripts run from that shell (its child processes) to have access to that variable too, we must source plus export.
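To replay the whole experiment in one go, here is a sketch that writes equivalents of the two scripts into a throwaway directory (created with mktemp -d). It makes two assumptions of its own: the scripts use #!/bin/sh rather than #!/bin/bash, so the sketch doesn't depend on bash being installed, and it uses the portable dot form of source. The variable names after_run, after_source, and child_sees are just for illustration:

```shell
# Work in a throwaway directory so no real files are touched
cd "$(mktemp -d)" || exit 1

# Equivalents of test_src.sh (with export) and test_src_2.sh from above
printf '#!/bin/sh\nexport myvariable=54\necho $myvariable\n' > test_src.sh
printf '#!/bin/sh\necho $myvariable\n' > test_src_2.sh
chmod +x test_src.sh test_src_2.sh

unset myvariable
./test_src.sh                    # runs in a child process and prints 54...
after_run=${myvariable-}         # ...but leaves nothing behind in this shell

. ./test_src.sh                  # sourced: the assignment happens in this shell
after_source=$myvariable         # now 54
child_sees=$(./test_src_2.sh)    # exported, so a new child process inherits it

echo "after run: '$after_run', after source: '$after_source', child sees: '$child_sees'"
```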

ln

From An Introduction to the Command-Line (on Unix-like systems) - Links: If you've ever used the Make Alias command on a Macintosh (not to be confused with the unix command alias), you've already developed intuition for what a link is. Suppose you have a file in one folder and you want that file to exist in another folder simultaneously. You could copy the file, but that would be wasteful. Moreover, if the file changes, you'll have to re-copy it, which is a huge ball-ache. Links solve this problem. A link to a file is a stand-in for the original file, often used to access the original file from an alternate file path. It's not a copy of the file but, rather, points to the file.

To make a symbolic link, use the command ln:

$ ln -s /path/to/target/file mylink

This produces:

mylink --> /path/to/target/file

in the cwd, as ls -hl will show. Note that removing mylink:

$ rm mylink

does not affect our original file.

If we give the target (or source) path as the sole argument to ln, the name of the link will be the same as the source file's. So:

$ ln -s /path/to/target/file

produces:

file --> /path/to/target/file

Links are incredibly useful for all sorts of reasons, the primary one being, as we've already remarked, that you may want a file to exist in multiple locations without making extraneous, space-consuming copies. You can make links to directories as well as files. Suppose you add a directory to your PATH that has a particular version of a program in it. If you install a newer version, you'll need to change the PATH to include the new directory. However, if you add a link to your PATH and keep the link always pointing to the most up-to-date directory, you won't need to keep fiddling with your PATH. The scenario could look like this:

$ ls -hl myprogram
current -> version3
version1
version2
version3

(Here I'm hiding some of the long-listing output.) In contrast to our other examples, the link is in the same directory as the target. Its purpose is to tell us which version, among the many crowding a directory, we should use.
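When version4 arrives, repointing current is a one-liner. A minimal sketch with hypothetical directory names mirroring the listing above: -f replaces the existing link, and -n tells ln to treat current as a plain file rather than following it into version3 (without -n, ln would typically create a new link inside version3 instead of replacing current).

```shell
# Hypothetical layout mirroring the listing above, in a scratch directory
cd "$(mktemp -d)" || exit 1
mkdir version1 version2 version3 version4
ln -s version3 current          # current -> version3

# Repoint the link: -f replaces it, -n stops ln from descending into version3
ln -sfn version4 current        # current -> version4
ls -l current

target=$(readlink current)      # plain readlink prints the link's target
echo "current points to $target"
```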

Another good practice is putting links in your home directory to folders you often use. This way, navigating to those folders is easy when you log in. If you make the link:

~/MYLINK --> /some/long/and/complicated/path/to/an/often/used/directory

then you need only type:

$ cd MYLINK

rather than:

$ cd /some/long/and/complicated/path/to/an/often/used/directory

Links are everywhere, so be glad you've made their acquaintance!

readlink

readlink provides a way to get the absolute path of a directory. Get the absolute path of the directory mydir:

$ readlink -m mydir

Get the absolute path of the cwd:

$ readlink -m .

Note this is different from:

$ pwd

because I'm using the term absolute path to express not only that the path is not a relative path, but also to denote that it's free of symbolic links. So if we have the link discussed above:

~/MYLINK --> /some/long/and/complicated/path/to/an/often/used/directory

then:

$ cd ~/MYLINK
$ readlink -m .
/some/long/and/complicated/path/to/an/often/used/directory

One profound annoyance: readlink doesn't work the same way on Mac unix (Darwin); in particular, -m is a GNU extension. To get the GNU version, you need to install the GNU coreutils (Homebrew installs it under the name greadlink).
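If installing GNU coreutils isn't an option, the POSIX -P option of pwd gives a portable way to resolve the symbolic links in the current directory's path. A sketch using a hypothetical link in a scratch directory instead of ~/MYLINK (on macOS the resolved path may begin with /private, because /var and /tmp are themselves links there):

```shell
# Hypothetical layout: a real directory plus a link pointing at it
dir=$(mktemp -d)
mkdir -p "$dir/some/long/path"
ln -s "$dir/some/long/path" "$dir/MYLINK"

cd "$dir/MYLINK" || exit 1
logical=$(pwd)        # the path as you navigated to it, link and all
physical=$(pwd -P)    # every symbolic link resolved, like readlink -m .
echo "logical:  $logical"
echo "physical: $physical"
```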

git

git, written by Linus Torvalds, is a famous utility for version control in software development. Everyone doing serious software development uses version control, which is related to the familiar concept of saving a file. Suppose you're writing an article or a computer program. As you progress, you save the file at different checkpoints ("file_v1", "file_v2", "file_v3"). git is an extension of this idea except, instead of saving a single file, it can take a snapshot of the state of all the files and folders in your project directory. In git jargon, this snapshot is called a "commit" and, forever after, you can revisit the state of your project at this snapshot.

The popular site GitHub took the utility git and added servers in the cloud which (to a first approximation) respond only to git commands. This allows people to both back up their code base remotely and easily collaborate on software development.

git is such a large subject (the utility has many sub-commands) that I've given it a full post here.

sleep

The command sleep pauses for an amount of time specified in seconds. For example, sleep for 5 seconds:

$ sleep 5

ps, pstree, jobs, bg, fg, kill, top, htop

Borrowing from An Introduction to the Command-Line (on Unix-like systems) - Processes and Running Processes in the Background: Processes are a deep subject intertwined with topics like memory usage, threads, scheduling, and parallelization. My understanding of this stuff (which encroaches on the domain of operating systems) is narrow, but here are the basics from a unix-eye-view. The command ps displays information about your processes:

$ ps # show process info
$ ps -f # show verbose process info

What if you have a program that takes some time to run? You run the program on the command line but, while it's running, your hands are tied. You can't do any more computing until it's finished. Or can you? You can, by running your program in the background. Let's look at the command sleep, which pauses for an amount of time specified in seconds. To run things in the background, use an ampersand:

$ sleep 60 &
[1] 6846
$ sleep 30 &
[2] 6847

The numbers in brackets are job numbers, and the numbers after them are the PIDs or process IDs, which are unique identifiers for every process running on our computer. Look at our processes:

$ ps -f
  UID   PID  PPID   C STIME   TTY           TIME CMD
  501  5166  5165   0 Wed12PM ttys008    0:00.20 -bash
  501  6846  5166   0 10:57PM ttys008    0:00.01 sleep 60
  501  6847  5166   0 10:57PM ttys008    0:00.00 sleep 30

The header is almost self-explanatory, but what's TTY? Wikipedia tells us that this is an anachronistic name for the terminal that derives from TeleTYpewriter. Observe that bash itself is a process which is running. We also see that this particular bash (PID 5166) is the parent process of both of our sleep commands (PPID stands for parent process ID). Like directories, processes are hierarchical. Just as every directory except the root directory has a parent directory, every process except the first one (init in unix or launchd on the Mac) has a parent process. And just as tree shows us the directory hierarchy, pstree shows us the process hierarchy:

$ pstree
-+= 00001 root /sbin/launchd
 |-+= 00132 oliver /sbin/launchd
 | |-+= 00252 oliver /Applications/Utilities/Terminal.app/Contents/MacOS/Terminal
 | | \-+= 05165 root login -pf oliver
 | |   \-+= 05166 oliver -bash
 | |     |--= 06846 oliver sleep 30
 | |     |--= 06847 oliver sleep 60
 | |     \-+= 06848 oliver pstree
 | |       \--- 06849 root ps -axwwo user,pid,ppid,pgid,command

This command actually shows every single process on the computer, but I've cheated a bit and cut out everything but the processes we're looking at. We can see the parent-child relationships: sleep and pstree are children of bash, which is a child of login, which is a child of Terminal, and so on. With vanilla ps, every process we saw began in a terminal; that's why it had a TTY ID. However, we're probably running Safari or Chrome. How do we see these processes as well as the myriad others running on our system? To see all processes, not just those spawned by terminals, use the -A flag:

$ ps -A # show all process info
$ ps -fA # show all process info (verbose)

Returning to our example with sleep, we can see the jobs we're currently running with the command jobs:

$ jobs
[1]- Running sleep 60 &
[2]+ Running sleep 30 &

What if we run our sleep job in the foreground, but it's holding things up and we want to move it to the background? Ctrl-z will pause (stop) a job, and the commands bg and fg set it running in either the background or the foreground.

$ sleep 60 # pause job with control-z
^Z
[1]+ Stopped sleep 60
$ jobs # see jobs
[1]+ Stopped sleep 60
$ bg # set running in background
[1]+ sleep 60 &
$ jobs # see jobs
[1]+ Running sleep 60 &
$ fg # bring job from background to foreground
sleep 60

We can also kill a job:

$ sleep 60 &
[1] 7161
$ jobs
[1]+ Running sleep 60 &
$ kill %1
$
[1]+ Terminated: 15 sleep 60

In this notation %n refers to the nth job. Recall that we can also kill a job in the foreground with Ctrl-c. More generally, even if we didn't start a process ourselves, we can kill any process we have permission to signal by using its PID. Suppose we want to kill the terminal in which we are working. Let's grep for it:

$ ps -Af | grep Terminal
  501   252   132   0 11:02PM ??         0:01.66 /Applications/Utilities/Terminal.app/Contents/MacOS/Terminal
  501  1653  1532   0 12:09AM ttys000    0:00.00 grep Terminal

We see that this particular process happens to have a PID of 252. grep returned any process with the word Terminal, including the grep itself. We can be more precise with awk and see the header, too:

$ ps -Af | awk 'NR==1 || $2==252'
  UID   PID  PPID   C STIME   TTY           TIME CMD
  501   252   132   0 11:02PM ??         0:01.89 /Applications/Utilities/Terminal.app/Contents/MacOS/Terminal

(That's: print the first row OR anything with the 2nd field equal to 252.) Now let's kill it:

$ kill 252

Poof! Our terminal is gone.
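When you start the background job yourself, there's no need to grep through ps for its PID: the shell stores the PID of the most recent background command in the special parameter $!. A minimal sketch, with sleep standing in for a real program and pid and status as illustrative variable names:

```shell
sleep 60 &                  # start a background job
pid=$!                      # $! holds the PID of the most recent background job
echo "started sleep with PID $pid"

kill "$pid"                 # sends SIGTERM by default
status=0
wait "$pid" || status=$?    # reap the job; wait returns its exit status
echo "exit status: $status" # above 128 means killed by a signal (bash: 128 + 15 = 143)
```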

Running stuff in the background is useful, especially if you have a time-consuming program. If you're scripting a lot, you'll often find yourself running something like this:

$ script.py > out.o 2> out.e &

i.e., running something in the background and saving both the output and error.

Two other commands that come to mind are time, which times how long your script takes to run, and nohup ("no hang up"), which allows your script to run even if you quit the terminal:

$ time script.py > out.o 2> out.e &
$ nohup script.py > out.o 2> out.e &

As we mentioned above, you can also set multiple jobs to run in the background, in parallel, from a loop:

$ for i in 1 2 3; do { echo "***"$i; sleep 60 & } done

(Of course, you'd want to run something more useful than sleep!)
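In a script, you usually need to pause until such background jobs are done before using their results. The wait builtin, called with no arguments, blocks until every background child of the shell has exited. A sketch, again with sleep as a placeholder for real work and hypothetical output file names out_1.txt to out_3.txt:

```shell
cd "$(mktemp -d)" || exit 1      # scratch directory for the output files

start=$(date +%s)
for i in 1 2 3; do
    { sleep 1; echo "job $i done" > "out_$i.txt"; } &   # each job runs in the background
done
wait                             # block until every background job has exited
end=$(date +%s)

cat out_1.txt out_2.txt out_3.txt
echo "elapsed: $((end - start)) s"   # roughly 1 s rather than 3, since the jobs overlap
```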

If you're lucky enough to work on a large computer cluster shared by many users—some of whom may be running memory- and time-intensive programs—then scheduling different users' jobs is a daily fact of life.

At work we use a queuing system called the Sun Grid Engine to solve this problem.

I wrote a short SGE wiki here.

To get a dynamic view of your processes, loads of other information, and sort them in different ways, use top:

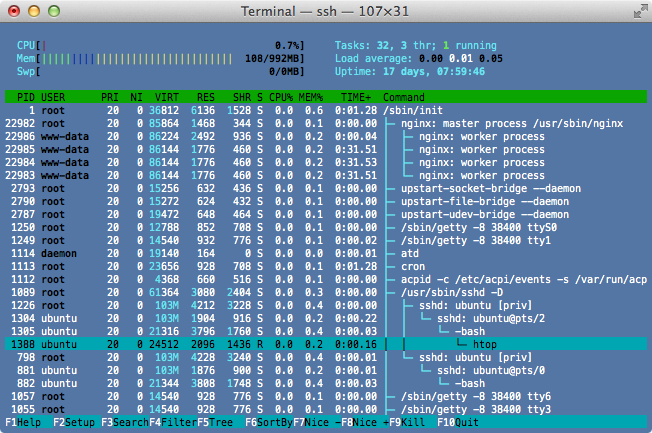

$ topHere's a screenshot of htop—a souped-up version of top—running on Ubuntu:

htop can show us a traditional top output split-screened with a process tree. We see various users—root, ubuntu, and www-data—and we see various processes, including init which has a PID of 1. htop also shows us the percent usage of each of our cores—here there's only one and we're using just 0.7%. Can you guess what this computer might be doing? I'm using it to host a website, as the process for nginx, a popular web server, gives away.

nohup

As we mentioned above, nohup ("no hang up") allows your script to run even if you quit the terminal, as in:

$ nohup script.py > out.o 2> out.e &

If you start such a process, you can squelch it by using kill on its process ID or, less practically, by shutting down your computer. You can track down your process with:

$ ps -Af | grep script.py

nohup is an excellent way to make a job running in the background more robust.

time

As we mentioned above, time tells you how long your script or command took to run. This is useful for benchmarking the efficiency of your program, among other things. For example, to time sleep:

$ time sleep 10
real 0m10.003s
user 0m0.000s
sys 0m0.000s

How do you tell how much memory a program or command consumed? Contrary to what its name suggests, the GNU version of the time command also gives us this information with the -v flag (on macOS, /usr/bin/time takes -l instead):

$ time -v sleep 10
-bash: -v: command not found

What?! We got an error. Why?

$ type time
time is a shell keyword

Confusingly, the reason is that time is actually a shell keyword, so bash's built-in timing treats -v as the name of the command to run. To use the standalone program, we have to call it by its full path:

$ /usr/bin/time -v sleep 10
	Command being timed: "sleep 10"
	User time (seconds): 0.00
	System time (seconds): 0.00
	Percent of CPU this job got: 0%
	Elapsed (wall clock) time (h:mm:ss or m:ss): 0:10.00
	Average shared text size (kbytes): 0
	Average unshared data size (kbytes): 0
	Average stack size (kbytes): 0
	Average total size (kbytes): 0
	Maximum resident set size (kbytes): 2560
	Average resident set size (kbytes): 0
	Major (requiring I/O) page faults: 0
	Minor (reclaiming a frame) page faults: 202
	Voluntary context switches: 2
	Involuntary context switches: 0
	Swaps: 0
	File system inputs: 0
	File system outputs: 0
	Socket messages sent: 0
	Socket messages received: 0
	Signals delivered: 0
	Page size (bytes): 4096
	Exit status: 0

Now we get the information payload.

seq

seq prints a sequence of numbers. Display numbers 1 through 5:

$ seq 1 5
1
2
3
4
5

You can achieve the same result with:

$ echo {1..5} | tr " " "\n"

1

2

3

4

5

If you add a number in the middle of your seq range, this will be the "step":

$ seq 1 2 10
1
3
5
7
9

cut

cut cuts one or more columns from a file, and delimits on tab by default. Suppose a tab-separated file, sample.blast.txt, is:

TCONS_00007936|m.162 gi|27151736|ref|NP_006727.2| 100.00 324
TCONS_00007944|m.1236 gi|55749932|ref|NP_001918.3| 99.36 470
TCONS_00007947|m.1326 gi|157785645|ref|NP_005867.3| 91.12 833
TCONS_00007948|m.1358 gi|157785645|ref|NP_005867.3| 91.12 833

Then:

$ cat sample.blast.txt | cut -f2
gi|27151736|ref|NP_006727.2|
gi|55749932|ref|NP_001918.3|
gi|157785645|ref|NP_005867.3|
gi|157785645|ref|NP_005867.3|

You can specify the delimiter with the -d flag, so:

$ cat sample.blast.txt | cut -f2 | cut -f4 -d"|"
NP_006727.2
NP_001918.3
NP_005867.3
NP_005867.3

although this is long-winded, and in this case we can achieve the same result simply with:

$ cat sample.blast.txt | cut -f5 -d"|"
NP_006727.2
NP_001918.3
NP_005867.3
NP_005867.3

Don't confuse cut with its non-unix namesake on Macintosh, which deletes text while copying it to the clipboard.

Tip: If you're a Vim user, running cut as a system command within Vim is a neat way to filter text. Read more: Wiki Vim - System Commands in Vim.
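Back to cut itself: -f also accepts lists and ranges of fields, which often saves chaining several cut calls. A small sketch on a made-up tab-separated line:

```shell
# A made-up four-column, tab-separated line
line=$(printf 'a\tb\tc\td')

printf '%s\n' "$line" | cut -f2,4     # a list of fields: b and d, tab-separated
printf '%s\n' "$line" | cut -f2-      # field 2 to the end: b, c, d
printf '%s\n' "$line" | cut -f-2      # start through field 2: a, b

# Lists work the same way with -d; here the 4th |-separated field
echo "gi|27151736|ref|NP_006727.2|" | cut -d"|" -f4    # NP_006727.2
```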

paste

paste joins files together in a column-wise fashion. Another way to think about this is in contrast to cat, which joins files vertically. For example:

$ cat file1.txt
a
b
c

$ cat file2.txt
1
2
3

$ paste file1.txt file2.txt
a 1
b 2
c 3

Paste with a delimiter:

$ paste -d";" file1.txt file2.txt
a;1
b;2
c;3

As with cut, paste's non-unix namesake on Macintosh (inserting text from the clipboard) is a different beast entirely.

A neat feature of paste is the ability to put different rows of a file on the same line. For example, if the file sample.fa is:

>TCONS_00046782
FLLRQNDFHSVTQAGVQWCDLGSLQSLPPRLKQISCLSLLSSWDYRHRPPHPAFFLFFFLF
>TCONS_00046782
MRWHMPIIPALWEAEVSGSPDVRSLRPTWPTTPSLLKTKNKTKQNISWAWCMCL
>TCONS_00046782
MFCFVLFFVFSRDGVVGQVGLKLLTSGDPLTSASQSAGIIGMCHRIQPWLLIY

(This is fasta format from bioinformatics.) Then:

$ cat sample.fa | paste - -
>TCONS_00046782 FLLRQNDFHSVTQAGVQWCDLGSLQSLPPRLKQISCLSLLSSWDYRHRPPHPAFFLFFFLF
>TCONS_00046782 MRWHMPIIPALWEAEVSGSPDVRSLRPTWPTTPSLLKTKNKTKQNISWAWCMCL
>TCONS_00046782 MFCFVLFFVFSRDGVVGQVGLKLLTSGDPLTSASQSAGIIGMCHRIQPWLLIY

And you can do this with as many dashes as you like.
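To see what each - is doing: every dash reads the next line from standard input, so two dashes join consecutive lines in pairs, separated by a tab. The sketch below uses a tiny made-up fasta snippet, then shows the related -s (serial) flag, which joins all lines of the input into a single line:

```shell
# Two made-up fasta records: a header line, then a sequence line
fa=$(printf '>seq1\nACGT\n>seq2\nTTGA\n')

printf '%s\n' "$fa" | paste - -                   # header and sequence joined by a tab
printf '%s\n' "$fa" | paste - - | tr '\t' '\n'    # undo it: one item per line again

# -s joins every line of the input into one line, here comma-delimited
seq 1 5 | paste -s -d, -                          # 1,2,3,4,5
```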

awk

From An Introduction to the Command-Line (on Unix-like systems) - awk: awk and sed are command line utilities which are themselves programming languages built for text processing. As such, they're vast subjects, as these huge manuals (GNU Awk Guide, Bruce Barnett's Awk Guide, GNU Sed Guide) attest. Both of these languages are almost antiques which have been pushed into obsolescence by Perl and Python. For anything serious, you probably don't want to use them. However, their syntax makes them useful for simple parsing or text manipulation problems that crop up on the command line. Writing a simple line of awk can be faster and less hassle than hauling out Perl or Python.

The key point about awk is, it works line by line. A typical awk construction is:

cat file.txt | awk '{ some code }'

Awk executes its code once for every line.

Let's say we have a file, test.txt, such that:

$ cat test.txt
1 c
3 c
2 t
1 c

In awk, the notation for the first field is $1, $2 is for second, and so on. The whole line is $0. For example:

$ cat test.txt | awk '{print}' # print full line

1 c

3 c

2 t

1 c

$ cat test.txt | awk '{print $0}' # print full line

1 c

3 c

2 t

1 c

$ cat test.txt | awk '{print $1}' # print col 1

1

3

2

1

$ cat test.txt | awk '{print $2}' # print col 2

c

c

t

c

There are two exceptions to the execute code per line rule: anything in a BEGIN block gets executed before the file is read and anything in an END block gets executed after it's read.

If you define variables in awk they're global and persist rather than being cleared every line.

For example, we can concatenate the elements of the first column with an @ delimiter using the variable x:

$ cat test.txt | awk 'BEGIN{x=""}{x=x"@"$1; print x}'

@1

@1@3

@1@3@2

@1@3@2@1

$ cat test.txt | awk 'BEGIN{x=""}{x=x"@"$1}END{print x}'

@1@3@2@1

Or we can sum up all values in the first column:

$ cat test.txt | awk '{x+=$1}END{print x}' # x+=$1 is the same as x=x+$1

7

Awk has a bunch of built-in variables which are handy: NR is the row number; NF is the number of fields in the current row; and OFS is the output field separator.

There are many more you can read about here.

Continuing with our very contrived examples, let's see how these can help us:

$ cat test.txt | awk '{print $1"\t"$2}' # write tab explicitly

1 c

3 c

2 t

1 c

$ cat test.txt | awk '{OFS="\t"; print $1,$2}' # set output field separator to tab

1 c

3 c

2 t

1 c

Setting OFS spares us having to type a "\t" every time we want to print a tab.

We can just use a comma instead.

Look at the following three examples:

$ cat test.txt | awk '{OFS="\t"; print $1,$2}' # print file as is

1 c

3 c

2 t

1 c

$ cat test.txt | awk '{OFS="\t"; print NR,$1,$2}' # print row num

1 1 c

2 3 c

3 2 t

4 1 c

$ cat test.txt | awk '{OFS="\t"; print NR,NF,$1,$2}' # print row & field num

1 2 1 c

2 2 3 c

3 2 2 t

4 2 1 c

So the first command prints the file as it is.

The second command prints the file with the row number added in front.

And the third prints the file with the row number in the first column and the number of fields in the second—in our case always two.

Although these are purely pedagogical examples, these variables can do a lot for you.

For example, if you wanted to print the 3rd row of your file, you could use:

$ cat test.txt | awk '{if (NR==3) {print $0}}' # print the 3rd row of your file

2 t

$ cat test.txt | awk '{if (NR==3) {print}}' # same thing, more compact syntax

2 t

$ cat test.txt | awk 'NR==3' # same thing, most compact syntax

2 t

Sometimes you have a file and you want to check if every row has the same number of columns.

Then use:

$ cat test.txt | awk '{print NF}' | sort -u

2

In awk $NF refers to the contents of the last field:

$ cat test.txt | awk '{print $NF}'

c

c

t

c

An important point is that by default awk delimits on white-space, not tabs (unlike, say, cut).

White space means any combination of spaces and tabs.

You can tell awk to delimit on anything you like by using the -F flag.

For instance, let's look at the following situation:

$ echo "a b" | awk '{print $1}'

a

$ echo "a b" | awk -F"\t" '{print $1}'

a b

When we feed a space b into awk, $1 refers to the first field, a.

However, if we explicitly tell awk to delimit on tabs, then $1 is the whole string a b, because the input contains no tab to split on.
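The distinction matters most when a tab-separated file has empty fields: with the default whitespace splitting, an empty column silently disappears and every later column shifts left. A minimal sketch on a made-up row whose middle field is empty:

```shell
# Three tab-separated columns, the middle one empty
row=$(printf 'a\t\tc')

printf '%s\n' "$row" | awk '{print NF}'           # 2: the run of tabs collapses
printf '%s\n' "$row" | awk -F'\t' '{print NF}'    # 3: every tab is a separator

# Setting FS and OFS together keeps the output tab-separated as well
printf '%s\n' "$row" | awk 'BEGIN{FS=OFS="\t"} {$2="b"; print}'   # a, b, c with tabs
```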

You can also use shell variables inside your awk by importing them with the -v flag:

$ x=hello

$ cat test.txt | awk -v var="$x" '{ print var"\t"$0 }'

hello 1 c

hello 3 c

hello 2 t

hello 1 c

And you can write to multiple files from inside awk:

$ cat test.txt | awk '{if ($1==1) {print > "file1.txt"} else {print > "file2.txt"}}'

$ cat file1.txt
1 c
1 c

$ cat file2.txt
3 c
2 t

For loops in awk:

$ echo joe | awk '{for (i = 1; i <= 5; i++) {print i}}'

1

2

3

4

5

Question: In the following case, how would you print the numbers of the rows in which the first field equals the second field?

$ echo -e "a\ta\na\tc\na\tz\na\ta"
a a
a c
a z
a a

Here's the answer:

$ echo -e "a\ta\na\tc\na\tz\na\ta" | awk '$1==$2{print NR}'

1

4

Question: How would you print the average of the first column in a text file?

$ cat file.txt | awk 'BEGIN{x=0}{x=x+$1;}END{print x/NR}'

NR is a special variable representing row number.
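One caveat: on empty input NR is 0 in the END block, so the division either fails or prints something meaningless, depending on your awk. A slightly more defensive sketch (the sample numbers are made up to match the first column of test.txt):

```shell
# Made-up sample whose first column matches test.txt: 1, 3, 2, 1
printf '1 c\n3 c\n2 t\n1 c\n' |
    awk '{x += $1} END {if (NR > 0) print x / NR; else print "no rows"}'   # 1.75

# Empty input no longer divides by zero
: | awk '{x += $1} END {if (NR > 0) print x / NR; else print "no rows"}'    # no rows
```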

The take-home lesson is, you can do tons with awk, but you don't want to do too much. Anything that you can do crisply on one, or a few, lines is awk-able. For more involved scripting examples, see An Introduction to the Command-Line (on Unix-like systems) - More awk examples.

sed

From An Introduction to the Command-Line (on Unix-like systems) - sed: Sed, like awk, is a full-fledged language that is convenient to use in a very limited sphere (GNU Sed Guide). I mainly use it for two things: (1) replacing text, and (2) deleting lines. Sed is often mentioned in the same breath as regular expressions although, like the rest of the world, I'd use Perl or Python when it comes to that. Nevertheless, let's see what sed can do.

Sometimes the first line of a text file is a header and you want to remove it. Then:

$ cat test_header.txt
This is a header
1 asdf
2 asdf
2 asdf

$ cat test_header.txt | sed '1d' # delete the first line

1 asdf

2 asdf

2 asdf

To remove the first 3 lines:

$ cat test_header.txt | sed '1,3d' # delete lines 1-3

2 asdf

1,3 is sed's notation for the range 1 to 3.

We can't do much more without entering regular expression territory.

One sed construction is:

/pattern/d

where d stands for delete if the pattern is matched.

So to remove lines beginning with #:

$ cat test_comment.txt
1 asdf
# This is a comment
2 asdf
# This is a comment
2 asdf

$ cat test_comment.txt | sed '/^#/d'
1 asdf
2 asdf
2 asdf

Another construction is:

s/A/B/

where s stands for substitute.

So this means replace A with B.

By default, this only replaces the first occurrence of A on each line, but if you put a g at the end, for global, all As are replaced:

s/A/B/g

For example:

$ # replace 1st occurrence of kitty with X

$ echo "hello kitty. goodbye kitty" | sed 's/kitty/X/'

hello X. goodbye kitty

$ # same thing. using | as a separator is ok

$ echo "hello kitty. goodbye kitty" | sed 's|kitty|X|'

hello X. goodbye kitty

$ # replace all occurrences of kitty

$ echo "hello kitty. goodbye kitty" | sed 's/kitty/X/g'

hello X. goodbye X

Find-and-replace is such a useful ability that virtually every text editor offers it too.

By replacing some text with nothing, you can also use this as a delete:

$ echo "hello kitty. goodbye kitty" | sed 's|kitty||' hello . goodbye kitty

$ echo "hello kitty. goodbye kitty" | sed 's|kitty||g' hello . goodbyeSed is especially good when you're trying to rename batches of files on the command line. I often have occasion to use it in for loops:

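$ touch file1.txt file2.txt file3.txt

$ for i in file*; do echo "$i"; j=$( echo "$i" | sed 's|\.txt$|.html|' ); mv "$i" "$j"; done

file1.txt

file2.txt

file3.txt

$ ls

file1.html file2.html file3.html

(The dot is escaped as \. because an unescaped . in a sed pattern matches any character, and the $ anchors the match to the end of the name.)

Sed can also edit files in place with the -i flag, that is to say, modify the file without going through the trouble of creating a new file and doing the renaming dance. (These -i examples use GNU sed; BSD sed on a Mac requires an argument after -i, such as -i ''.) For example, to add the line This is a header to the top of myfile.txt: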

$ sed -i '1i This is a header' myfile.txt

Delete the first line (e.g., a header) from a file:

$ sed -i '1d' myfile.txt
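Here is a slightly more defensive version of the renaming loop, run in a scratch directory. It is a sketch rather than a drop-in tool: it globs only *.txt files, escapes the dot and anchors the pattern with $ (so a name like file1txt.txt is not mangled into file.html.txt), and quotes the variables so that file names with spaces survive.

```shell
#!/usr/bin/env bash
tmp=$(mktemp -d)
cd "$tmp" || exit 1
touch file1.txt file2.txt file3.txt

for i in file*.txt; do
    # "\." is a literal dot and "$" anchors at the end, so only a trailing .txt changes
    j=$(printf '%s\n' "$i" | sed 's|\.txt$|.html|')
    # Quotes keep names with spaces intact; "--" guards against names starting with "-"
    mv -- "$i" "$j"
done
ls
```

In bash you can also skip sed here with parameter expansion: j="${i%.txt}.html" strips the trailing .txt before appending .html.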

date, cal

date prints the date:

$ date

Sat Mar 21 18:23:56 EDT 2014

You can choose among many formats. For example, if the date were March 10, 2012, then:

$ date "+%y%m%d"

120310

$ date "+%D"

03/10/12

Print the date in seconds since the Unix epoch (00:00:00 UTC on January 1, 1970):

$ date "+%s"

cal prints a calendar for the current month, as in:

$ cal

January

Su Mo Tu We Th Fr Sa

1

2 3 4 5 6 7 8

9 10 11 12 13 14 15

16 17 18 19 20 21 22

23 24 25 26 27 28 29

30 31

See the calendar for the whole year:

$ cal -y

See the calendar for, say, December 2011:

$ cal 12 2011
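The format strings can be checked without waiting for a particular day. This sketch assumes GNU date (the default on Linux): its -d flag, which parses a date string, and the @N epoch syntax are GNU extensions, while BSD date on a Mac uses a different interface (date -j -f ...). The -u flag pins everything to UTC so the results don't depend on your time zone.

```shell
#!/usr/bin/env bash
# Assumes GNU date: -d and "@N" are GNU extensions, not available in BSD date.

ymd=$(date -u -d '2012-03-10' '+%y%m%d')   # the example date from the text
slashes=$(date -u -d '2012-03-10' '+%D')    # %D is shorthand for %m/%d/%y

# %s counts seconds since 1970-01-01 00:00:00 UTC, so one day later is 86400
one_day=$(date -u -d '1970-01-02' '+%s')

# "@N" goes the other way: from epoch seconds back to a calendar date
epoch=$(date -u -d '@0' '+%Y-%m-%d')

echo "$ymd $slashes $one_day $epoch"
```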

gzip, gunzip, bzip2, bunzip2

It's good practice to conserve disk space whenever possible, and Unix has many file compression utilities for this purpose, gzip and bzip2 among them. File compression is a whole science unto itself, which you can read about here.

Compress a file with gzip:

$ gzip file

(The original file disappears and only file.gz remains.)

Unzip:

$ gunzip file.gz

bzip2 works the same way, producing file.bz2:

$ bzip2 file

Bunzip:

$ bunzip2 file.bz2

You can pipe into these commands!

$ cat file.gz | gunzip | head

$ cat file | awk '{print $1"\t"100}' | gzip > file2.gz

The first lets you look at a zipped file while leaving it compressed; the second shows how you can save space by creating a zipped file right off the bat (in this case, from the output of some random awk).

cat a file without unzipping it:

$ zcat file.gz

less a file without unzipping it:

$ zless file.gz

To emphasize the point again: if you're dealing with large data files, you should always compress them to save space.
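A round trip through gzip makes the pipe examples concrete. In this sketch the data files are hypothetical, generated with seq. gzip -c writes to stdout so the original file stays put, and gzip -dc (which is what zcat does) reads a compressed file without unzipping it on disk. gzip -dc is used here because on some BSD-derived systems zcat may expect the older .Z format instead of .gz.

```shell
#!/usr/bin/env bash
tmp=$(mktemp -d)
cd "$tmp" || exit 1

# Hypothetical sample data: 1000 numbered lines
seq 1 1000 > data.txt

# -c writes compressed output to stdout, so data.txt is kept
gzip -c data.txt > data.txt.gz

# Compress straight from a pipe, as in the awk example above
seq 1 1000 | awk '{print $1"\t"100}' | gzip > data2.gz

# -d decompresses, -c sends it to stdout; the .gz files are left untouched
first=$(gzip -dc data2.gz | head -n 1)

# cmp -s reads the decompressed stream from stdin ("-") and compares it silently
same=$(gzip -dc data.txt.gz | cmp -s - data.txt && echo yes)

ls -l data.txt data.txt.gz data2.gz
```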

tar

tar rolls, or glues, an entire directory structure into a single file.

Tar a directory named dir into a tarball called dir.tar:

$ tar -cvf dir.tar dir

(The original dir remains.) The options I'm using are -c for "create a new archive containing the specified items"; -f for "write the archive to the specified file"; and -v for verbose.

To untar, use the -x flag, which stands for extract:

$ tar -xvf dir.tar

Tar and gzip a directory dir into a compressed tarball dir.tar.gz (the -z flag filters the archive through gzip):

$ tar -zcvf dir.tar.gz dir

Extract plus unzip:

$ tar -zxvf dir.tar.gz
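To check what a tarball contains, or to unpack it somewhere other than the current directory, tar also has -t (list) and -C (change to a directory before extracting). This sketch builds a small hypothetical dir tree, archives it, lists it, extracts it into restored/, and confirms with diff -r that nothing changed:

```shell
#!/usr/bin/env bash
tmp=$(mktemp -d)
cd "$tmp" || exit 1

# Hypothetical directory tree to archive
mkdir -p dir/sub
echo "top" > dir/a.txt
echo "nested" > dir/sub/b.txt

# -c create, -z gzip, -f archive file name (-v left off to keep the output short)
tar -czf dir.tar.gz dir

# -t lists the archive contents without extracting anything
tar -tzf dir.tar.gz

# -C extracts into another directory instead of the current one
mkdir restored
tar -xzf dir.tar.gz -C restored

# diff -r exits 0 when the two trees are identical
diff -r dir restored/dir && echo "round trip OK"
```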

uniq

uniq filters a file, leaving only the unique lines, provided the file is sorted. Suppose:

$ cat test.txt

aaaa

bbbb

aaaa

aaaa

cccc

cccc

Then:

$ cat test.txt | uniq

aaaa

bbbb

aaaa

cccc

This can be thought of as local uniquing: uniq only collapses adjacent repeated rows, so you can still have a repeated row elsewhere in the file. If you want the global unique, sort first:

$ cat test.txt | sort | uniq

aaaa

bbbb

cccc

This is identical to:

$ cat test.txt | sort -u

aaaa

bbbb

cccc

uniq can also show you only the lines that are not unique, with the -d (duplicate) flag:

$ cat test.txt | sort | uniq -d

aaaa

cccc

And with the -c flag, uniq counts how many times each distinct row occurs (again, provided the file is sorted):

$ cat test.txt | sort | uniq -c

3 aaaa

1 bbbb

2 cccc
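All of the uniq variants above can be compared side by side. This sketch rebuilds test.txt as a bash variable. The awk step only normalises the padding that uniq -c puts before each count (GNU and BSD pad differently), and the final pipeline, sort | uniq -c | sort -rn, is a common idiom for ranking lines by frequency:

```shell
#!/usr/bin/env bash
# The six lines of test.txt from the text
lines=$'aaaa\nbbbb\naaaa\naaaa\ncccc\ncccc'

# uniq alone only collapses ADJACENT repeats
local_uniq=$(printf '%s\n' "$lines" | uniq)

# Sorting first makes identical lines adjacent, giving the global unique set
global_uniq=$(printf '%s\n' "$lines" | sort | uniq)

# -d keeps one copy of each line that was repeated
dups=$(printf '%s\n' "$lines" | sort | uniq -d)

# -c prefixes each line with its count; awk strips the leading padding
counts=$(printf '%s\n' "$lines" | sort | uniq -c | awk '{print $1, $2}')

# Most frequent line first: count, then sort the counts numerically in reverse
top=$(printf '%s\n' "$lines" | sort | uniq -c | sort -rn | awk 'NR == 1 {print $2}')

echo "$counts"
```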

dirname, basename

dirname and basename grab parts of a file path:

$ basename /some/path/to/file.txt

file.txt

$ dirname /some/path/to/file.txt

/some/path/to

The first gets the file name, the second the directory in which the file resides. To say the same thing a different way, dirname gets the directory minus the file, while basename gets the file minus the directory.

You can play with these:

$ ls $( dirname $( which my_program ) )

This would list the files wherever my_program lives.

In a bash script, it's sometimes useful to grab the directory where the script itself resides and store this path in a variable:

# get the directory in which your script itself resides (readlink -m is GNU coreutils)

d=$( dirname "$( readlink -m "$0" )" )
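A few extras around dirname and basename are sketched below. basename takes an optional suffix to strip. Bash parameter expansion can do the same job without starting a new process (for ordinary paths like this one; edge cases such as a bare file name with no slash behave differently). And cd plus pwd gives a script's directory on systems without GNU readlink -m, at the cost of not resolving a symlink that points to the script.

```shell
#!/usr/bin/env bash
p=/some/path/to/file.txt

name=$(basename "$p")          # file.txt
stem=$(basename "$p" .txt)     # the optional second argument strips a suffix: file
dir=$(dirname "$p")            # /some/path/to

# Parameter expansion equivalents for ordinary absolute paths like $p
name2=${p##*/}                 # remove the longest prefix ending in "/"
dir2=${p%/*}                   # remove the shortest suffix starting with "/"

# Portable script directory: does not need readlink -m, but does not follow
# a symlink that points at the script
script_dir=$(cd "$(dirname "$0")" && pwd)

echo "$name $stem $dir $name2 $dir2 $script_dir"
```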

set, unset

Use set to set various properties of your shell, somewhat analogous to a Preferences section in a GUI.

E.g., use vi-style key bindings in the terminal:

$ set -o vi

Use emacs-style key bindings in the terminal (the default):

$ set -o emacs

You can use set with the -x flag to debug:

set -x # activate debugging from here

. . .

set +x # de-activate debugging from here

This causes all commands to be echoed to stderr before they are run. For example, consider the following script:

#!/bin/bash

set -eux

# the other flags are:

# -e Exit immediately if a simple command exits with a non-zero status

# -u Treat unset variables as an error when performing parameter expansion

echo hello

sleep 5

echo joe

This will echo every command before running it. The output is:

+ echo hello

hello

+ sleep 5

+ echo joe

joe

Changing gears, you can use unset to clear variables, as in:

$ TEST=asdf

$ echo $TEST

asdf

$ unset TEST

$ echo $TEST # prints an empty line
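The effect of each flag is easiest to see in isolation. This sketch runs each case in a child bash (so the demo script itself keeps its normal settings) and captures the result: -x writes its + trace lines to stderr, -e stops at the first failing command, and -u turns an unset variable into an error.

```shell
#!/usr/bin/env bash
# -x: trace lines go to stderr, so capture stderr and discard stdout
# (2>&1 first points stderr at the capture, then >/dev/null drops stdout)
trace=$(bash -c 'set -x; echo hello' 2>&1 >/dev/null)

# -e: "false" returns non-zero, so the shell exits before the second echo
e_out=$(bash -c 'set -e; echo before; false; echo after')

# -u: expanding a variable that was never set is an error, not an empty string
if bash -c 'set -u; unset never_set; echo "$never_set"' 2>/dev/null; then
    u_status=ok
else
    u_status=failed
fi

echo "trace: $trace"
echo "set -e output: $e_out"
echo "set -u run: $u_status"
```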

env

There are 3 notable things about env. First, if you run it by itself, it will print out all of your environment variables:

$ env

Second, as discussed in An Introduction to the Command-Line (on Unix-like systems) - The Shebang, you can use env to avoid hard-wired paths in your shebang. Compare this:

#!/usr/bin/env python

to this:

#!/some/path/pythonThe script with the former shebang will conveniently be interpreted by whichever python is first in your PATH; the latter will be interpreted by /some/path/python.

And, third, as Wikipedia says, env can run a utility "in an altered environment without having to modify the currently existing environment." I never have occasion to use it this way but, since it was in the news recently, look at this example (stolen from here):

$ env 'COLOR=RED' bash -c 'echo "My favorite color is $COLOR"'

My favorite color is RED

$ echo $COLOR

The COLOR variable is only defined temporarily for the purposes of the env statement (when we echo it afterwards, it's empty). This construction sets us up to understand the bash Shellshock bug, which Stack Exchange illustrates using env:

$ env x='() { :;}; echo vulnerable' bash -c "echo this is a test"

I unpack this statement in a blog post.
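The temporary-environment behaviour can be checked directly. In this sketch COLOR is just an example variable. The sketch also shows the shell's built-in equivalent (a NAME=value prefix on the command) and env -i, which starts a command with an empty environment:

```shell
#!/usr/bin/env bash
unset COLOR   # make sure the parent shell has no COLOR

# env NAME=value cmd: the variable exists only in cmd's environment
child=$(env COLOR=RED bash -c 'echo "My favorite color is $COLOR"')

# The shell offers the same thing without env: a NAME=value prefix on a command
child2=$(COLOR=BLUE bash -c 'echo "$COLOR"')

# env -i runs the command with an EMPTY environment (no PATH, HOME, ...),
# so the inner env is given by full path and prints nothing
empty=$(env -i /usr/bin/env | wc -l | tr -d ' ')

echo "$child / $child2 / parent sees: '${COLOR:-}' / vars under env -i: $empty"
```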

uname

As its man page says, uname prints out various system information. In the simplest form:

$ uname

Linux

If you use the -a flag for all, you get all sorts of information:

$ uname -a Linux my.system 2.6.32-431.11.2.el6.x86_64 #1 SMP Tue Mar 25 19:59:55 \ UTC 2014 x86_64 x86_64 x86_64 GNU/Linux

df, du

df reports "file system disk space usage". This is the command you use to see how much space you have left on your hard disk. The -h flag means "human readable," as usual. To see the space consumption on my Mac, for instance:

$ df -h .

Filesystem Size Used Avail Use% Mounted on

/dev/disk0s3 596G 440G 157G 74% /

Run without a path argument, df shows you all of your mounted file systems. For example, if you're familiar with Amazon Web Services (AWS) jargon, df is the command you use to examine your mounted EBS volumes. They refer to it in the "Add a Volume to Your Instance" tutorial.

du is similar to df but it's for checking the sizes of individual directories. E.g.:

$ du -sh myfolder

284M myfolder

If you wanted to check how much space each folder is using in your HOME directory, you could do:

$ cd

$ du -sh *

This will probably take a while. Also note that there's some rounding in the calculation of how much space folders and files occupy, so the numbers df and du return may not be exact.

Find all the files in the directory /my/dir in the gigabyte range:

$ du -sh /my/dir/* | awk '$1 ~ /G/'
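du -sh output is easy to read but awkward to sort, because sizes like 284M and 2G don't compare numerically. Here is a sketch of one workaround, using two hypothetical folders filled with random bytes (random so that a compressing filesystem can't shrink them): ask du for kilobytes with -k and sort numerically, largest first. Many versions of sort also offer sort -h for human-readable sizes.

```shell
#!/usr/bin/env bash
tmp=$(mktemp -d)
cd "$tmp" || exit 1

# Two hypothetical folders of very different sizes
mkdir small big
head -c 1024 /dev/urandom > small/data
head -c 1048576 /dev/urandom > big/data   # 1 MiB

# -s: one total per argument; -k: sizes in KiB, so sort -n can order them
du -sk ./* | sort -rn

# Name of the largest entry: first line after sorting, second column
largest=$(du -sk ./* | sort -rn | head -n 1 | awk '{print $2}')
echo "largest: $largest"
```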

bind

As discussed in detail in An Introduction to the Command-Line (on Unix-like systems) - Working Faster with Readline Functions and Key Bindings, Readline Functions (GNU Documentation) allow you to take all sorts of shortcuts in the terminal. You can see all the Readline Functions by entering:

$ bind -P # show all Readline Functions and their key bindings

$ bind -l # show all Readline Functions

Four of the most excellent Readline Functions are:

- forward-word - jump cursor forward a word

- backward-word - jump cursor backward a word

- history-search-backward - scroll through your bash history backward

- history-search-forward - scroll through your bash history forward

For the first two, the default (emacs-mode) key bindings are:

- Meta-f - jump forward one word

- Meta-b - jump backward one word

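Since reaching for the Meta key can be awkward, you can instead bind these functions to the arrow keys:

- Cntrl-forward-arrow - forward-word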

- Cntrl-backward-arrow - backward-word

- up-arrow - history-search-backward

- down-arrow - history-search-forward

To create these arrow-key bindings, put the following in your setup file:

# make cursor jump over words

bind '"\e[5C": forward-word' # control+arrow_right

bind '"\e[5D": backward-word' # control+arrow_left

# make history searchable by entering the beginning of command

# and using up and down keys

bind '"\e[A": history-search-backward' # arrow_up

bind '"\e[B": history-search-forward' # arrow_down

(The escape sequences that terminals send for these keys vary, so these may not work universally.) How does this cryptic symbology translate into these particular keybindings? Again, refer to An Introduction to the Command-Line (on Unix-like systems).

alias, unalias

As mentioned in An Introduction to the Command-Line (on Unix-like systems) - Aliases, Functions, aliasing is a way of mapping one word to another. It can reduce your typing burden by making a shorter expression stand for a longer one:

alias c="cat"

This means every time you type c as a command, the shell reads it as cat, so instead of writing:

$ cat file.txt

you just write:

$ c file.txt

Another use of alias is to weld particular flags onto a command, so every time the command is called, the flags go with it automatically, as in:

alias cp="cp -R"

or

alias mkdir="mkdir -p"

Recall that the former allows you to copy directories as well as files, and the latter allows you to make nested directories.

Perhaps you always want to use these options, but this is a tradeoff between convenience and freedom.

In general, I prefer to use new words for aliases and not overwrite preexisting bash commands.

Here are some aliases I use in my setup file:

# coreutils

alias c="cat"

alias e="clear"

alias s="less -S"

alias l="ls -hl"

alias lt="ls -hlt"

alias ll="ls -al"

alias rr="rm -r"

alias r="readlink -m"

alias ct="column -t"

alias ch="chmod -R 755"

alias chh="chmod -R 644"

alias grep="grep --color"

alias yy="rsync -azv --progress"

# remove empty lines with white space

alias noempty="perl -ne '{print if not m/^(\s*)$/}'"

# awk

alias awkf="awk -F'\t'"

alias length="awk '{print length}'"

# notesappend, my favorite alias

alias n="history | tail -2 | head -1 | tr -s ' ' | cut -d' ' -f3- | awk '{print \"# \"\$0}' >> notes"

# HTML-related

alias htmlsed="sed 's|&|\&amp;|g; s|>|\&gt;|g; s|<|\&lt;|g'"

# git

alias ga="git add"

alias gc="git commit -m"

alias gac="git commit -a -m"

alias gp="git pull"

alias gpush="git push"

alias gs="git status"

alias gl="git log"

alias gg="git log --oneline --decorate --graph --all"

# alias gg="git log --pretty=format:'%h %s %an' --graph"

alias gb="git branch"

alias gch="git checkout"

alias gls="git ls-files"

alias gd="git diff"

To display all of your current aliases:

$ alias

To get rid of an alias, use unalias, as in:

$ unalias myalias
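One gotcha: aliases from your setup file work at the interactive prompt, but bash does not expand aliases in non-interactive shells such as scripts unless the expand_aliases option is turned on. A minimal sketch (file.txt is a scratch file created just for the demo):

```shell
#!/usr/bin/env bash
# Scripts are non-interactive, so bash ignores aliases unless this is set
shopt -s expand_aliases

alias c="cat"   # the example alias from the text

tmp=$(mktemp -d)
printf 'hello\n' > "$tmp/file.txt"

# The alias is defined on an earlier line and used as the first word of a command
c "$tmp/file.txt" > "$tmp/out.txt"

# "alias NAME" prints one definition; unalias removes it
alias c
unalias c
type c >/dev/null 2>&1 || echo "c is no longer defined"
```

Because an alias is plain text substitution at the start of a command, it cannot take arguments in the middle; for that, a shell function is the usual alternative.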

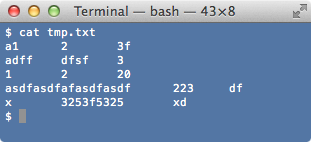

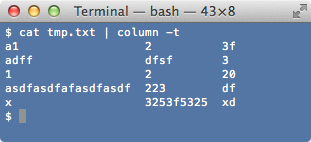

column

Suppose you have a file with fields of variable length. Viewing it in the terminal can be messy because, if a field in one row is longer than one in another, it will upset the spacing of the columns. You can remedy this as follows:

$ cat myfile.txt | column -t

This puts your file into a nice table, which is what the -t flag stands for. Here's an illustration: